fredrikj.net / blog /

What’s new in FLINT 2.3

July 2, 2012

After more than a year of development, version 2.3 of FLINT (Fast Library for Number Theory) is finally done! FLINT 2.3 contains a huge number of new modules and functions, bug fixes, and performance improvements, thanks to contributions from more than a dozen people.

An overview of the major changes can be found in the mailing list announcement, and a comprehensive list is given in the NEWS file.

In this blog post, I will talk about some of the major new features in more detail. I will focus disproportionately on features I worked on myself, and I apologize in advance to the other developers for this! Before anything else, I want to thank Bill and Sebastian for the huge amount of work they put into this release (and all the other contributors as well).

Soon available in a Sage near you

FLINT is, crucially, used in Sage as the default backend for polynomial arithmetic over the integers and rational numbers among other things.

The FLINT 2.x series is a complete rewrite of FLINT 1.x, providing cleaner and faster code and also many new features. Unfortunately, Sage still uses the nearly three years old FLINT 1.5. The work to update FLINT in Sage has been delayed due to backward incompatible changes from 1.x to 2.x as well as the fact that many essential functions in FLINT 1.x had not been ported over to the 2.x series as of FLINT 2.2.

However, FLINT 2.3 adds all the missing functionality, and patches to Sage for the interface changes have been written and are essentially just waiting for review, so hopefully the next version of Sage will contain FLINT 2.3. Later, we also want to wrap all the new 2.x functionality in Sage (help with this would be welcome).

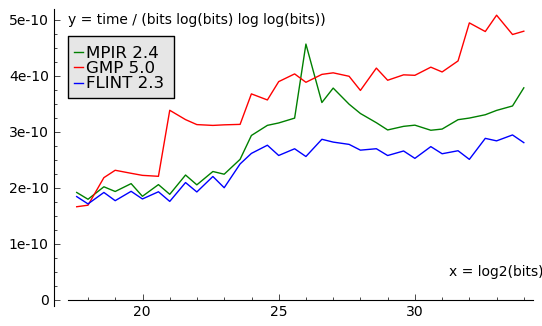

New FFT

Bill Hart finally finished his new integer FFT. The code is significantly faster than both MPIR 2.4.0 and GMP 5.0.2 for huge multiplications. For example, a multiplication of integers with $2^{34} \approx 17 \times 10^9$ bits takes 485 seconds with MPIR, 614 seconds with GMP, and 360 seconds with the new FLINT FFT. It also has various technical advantages such as being easier to generate tuning parameters for. The following plot shows the timings for balanced multiplications of integers up to $2^{34}$ bits, normalized by dividing by the theoretical complexity $O(b \log b \log \log b)$:

Bill also added Schönhage-Strassen polynomial multiplication which makes use of the new FFT. This speeds up multiplication of polynomials over $\mathbb{Z}$ and $\mathbb{Q}$ (and indirectly, many other operations) when the degrees and magnitudes of coefficients both become large. Until now, FLINT 2.x only used bit packing (Kronecker substitution) for huge multiplications, which is good but optimal only for small coefficients.

On my laptop, the speedup on benchmark problems compared to FLINT 2.2 is typically around 30 percent, but can be as much as a factor two in extreme cases. Here are two concrete benchmarks:

- The time for computing the Swinnerton-Dyer polynomial S12 (which has degree 4096 and coefficients with roughly the same number of decimal digits) dropped from 19 seconds to 14 seconds.

- The running time of a program I wrote for computing the Bernoulli numbers up to B10000 using $\mathbb{Q}[[x]]$ arithmetic dropped from 35 seconds to 26 seconds. (The current code in FLINT 2.3 for Bernoulli numbers uses a slightly different algorithm and takes 29 seconds.)

Right now, the new FFT is only used for polynomial multiplication and not for integer multiplication (FLINT simply calls integer multiplication functions in MPIR). The plan is to integrate the new FFT in a future version of MPIR (and later BSDNT). Then, the new MPIR version will speed up operations in FLINT that depend on integer multiplication, including polynomial multiplication in the range where bit packing is more efficient than the Schönhage-Strassen algorithm. To illustrate, Bill reported that the time for computing one million terms of the delta function q-expansion takes 3.0 seconds with FLINT 2.3 and the new FFT for integer multiplication, compared to 3.8 seconds with the FFT from the latest version of MPIR. Something to look forward to!

Improvements to the $p$-adic module

The module padic, added in FLINT 2.2, provides a highly optimized implementation of $\mathbb{Q}_p$, the field of $p$-adic numbers for arbitrary-precision $p$. Over the last year, Sebastian Pancratz has substantially improved the code and added new features.

Supported operations now include the usual arithmetic and conversion functions and inverse, square root, Teichmuller lift, logarithm and exponential. The FLINT functions are typically an order of magnitude faster than Magma, and some algorithms are asymptotically faster. The exponential function and logarithm use an efficient $O(n^2)$ basecase algorithm that only performs $O(n^{1/2})$ $p$-adic inversions and multiplications at precision $O(p^n)$, and for large $n$ switch to an asymptotically fast $O(n \log^2 n)$ algorithm based on binary splitting. As an example, FLINT computes a 17-adic logarithm to precision $O(17^{131072})$ in 2.4 seconds whereas Magma takes 2 hours 43 minutes (a speedup of 5500x).

Please note that although the code in the padic module is of high quality, it should be considered experimental in the sense that we probably will be making backward-incompatible changes to the interface in a future release. Sebastian is also working on modules for polynomials, matrices, and extension fields over $\mathbb{Q}_p$, which are not included in the current release (see his padic branch). Moreover, Andrés Goens is working on adapting Sebastian’s extension field code to implement arithmetic and polynomials over finite fields $\mathbb{F}_q$ for arbitrary-precision $q = p^n$.

Partitions

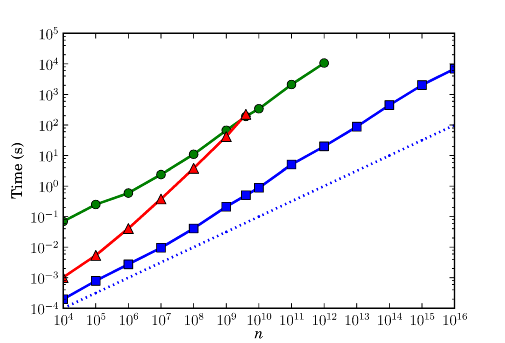

FLINT 2.3 includes my new implementation of the partition function $p(n)$, previously discussed on this blog and detailed further in a forthcoming paper (a preprint is available). Briefly, the FLINT partition function uses the Hardy-Ramanujan-Rademacher formula, implemented in such a way that the complexity is a quasi-optimal $O(n^{1/2+\varepsilon})$ and using various low-level optimizations. As a result, it runs some 500 times faster than all previously available implementations (that we are aware of).

The following nice image, which I’ve posted before, is a loglog plot of the time needed to compute $p(n)$ using Mathematica 7 (green circles), Sage 4.7 (red triangles), and FLINT (blue squares). The thin dotted line indicates the slope of the trivial lower complexity bound $\Omega(n^{1/2})$ just for writing out the result. A quick extrapolation suggests that $p(10^{19})$ would take about a decade to compute with Mathematica and a million years with Sage, after patching Sage to allow $n$ larger than 32 bits (Magma, not included, would take around $10^{10}$ years).

I have used the FLINT partition function to set a world record by computing the largest exact value of the partition function to date, $p(10^{19})$, a number with 3,522,804,578 digits. I also used it to compute 22 billion Ramanujan-type congruences, i.e. parameters $A,B,m$ such that $p(Ak+B) \equiv 0 \bmod m$ for all $k$, using an algorithm of Rhiannon Weaver (previously a few thousand congruences had been computed explicitly).

As a byproduct of working on the partition function, I also added code for efficiently evaluating Dedekind sums, computing the minimal polynomial of $\cos(2\pi/q)$, generating Chebyshev and cyclotomic polynomials, and a few other things, which may find other uses.

Polynomial GCD

Sebastian has ported the “half GCD” implementation from FLINT 1.x for polynomial GCDs over $\mathbb{Z}/n\mathbb{Z}$ (for word-size $n$) to the FLINT 2.x nmod_poly module. Actually, I should probably say that he has rewritten it, because the new code is much more streamlined. This fixes an important performance regression in FLINT 2.x compared to FLINT 1.x, making GCDs quasi-linear instead of quadratic. It also speeds up GCDs of huge integer and rational polynomials, which use a multimodular algorithm.

The crossover point of half GCD versus the classical Euclidean algorithm occurs for polynomials of degree a few hundred. For polynomials of degree several thousand, the code is about as fast as the FLINT 1.x GCD, but interestingly, the basecase Euclidean GCD is much faster in FLINT 2.x than in FLINT 1.x.

Power series composition and reversion

FLINT 2.3 adds functions for composition and reversion of power series, i.e. composition of polynomials mod $x^n$, over $\mathbb{Z}$, $\mathbb{Z}/p\mathbb{Z}$ (for word-size $p$) and $\mathbb{Q}$.

Composition uses an asymptotically fast algorithm of Brent and Kung; in fact, it uses the theoretically slower out of two algorithms given by Brent and Kung, but this algorithm is faster for practical lengths since the theoretically expensive operation is a matrix multiplication which in reality costs almost nothing compared to the other operations. A function for modular composition modulo an arbitrary polynomial over $\mathbb{Z}/p\mathbb{Z}$ has been added as well.

Reversion uses a new algorithm that I came up with last year, which generally beats Newton iteration by a constant factor (see my paper here for details).

The complexity of all these operations is about $O(n^{1.5})$ (theoretically $O(n^{1.91})$ for the matrix multiplication), compared to $O(n^2 \log n)$ for Horner’s rule with fast multiplication, and $O(n^3)$ for entirely classical algorithms.

A timing example: taking $f(x) = x + 2x^2 + 3x^3 + 4x^4 + \ldots$, FLINT 2.3 computes $f(f(x))$ to order $O(x^{1000})$ over the integers in 0.44 seconds (Magma and Sage take 5 and 13 seconds respectively), and it computes the reversion $f^{-1}(x)$ to the same order in 0.24 seconds (Magma and Sage both take around 20 seconds). The advantage of FLINT grows further with increased lengths.

Another timing example: taking as input $x \, \exp(x) = x + x^2 + x^3/2 + \ldots$ whose compositional inverse is the Lambert W function, FLINT 2.3 computes the reversion over the rationals to order $O(x^{1000})$ in 19 seconds. This is only a factor ten slower than the code I posted a while back which exploits the closed form of the input (effectively solving a differential equation). Magma is 100 times slower than FLINT 2.3, taking half an hour, and Sage and Mathematica are probably another order of magnitude slower than Magma (I did not wait for them to finish).

Polynomials with arbitrary-precision modulus

Sebastian has written a new module fmpz_mod_poly for polynomials over $\mathbb{Z}/n\mathbb{Z}$ with arbitrary-precision modulus $n$. The module supports all the usual basic arithmetic operations, as well as polynomial division and composition based on fast divide-and-conquer algorithms. The module also includes some more advanced features, such as fast radix conversion. There is code for computing greatest common divisors, but I believe only the ordinary Euclidean algorithm is used at this moment. The Euclidean algorithm is, of course, good enough as long as the polynomials have reasonably small degree (below a hundred, say) since no coefficient explosion occurs in $\mathbb{Z}/n\mathbb{Z}$.

The module also provides division and GCD functions that allow working with a composite modulus and indicate when a divisor is encountered, useful for implementing some integer factorization and primality proving algorithms.

Polynomial factorization

While at Sage Days 34 in Kaiserslautern last September, I ported the code for factoring polynomials over $\mathbb{Z}/p\mathbb{Z}$ (for word-size $p$) from FLINT 1.x to FLINT 2.x. Both the Cantor-Zassenhaus and Berlekamp algorithms are provided. Although the factoring code itself is essentially the same as in FLINT 1.x, it runs significantly faster in FLINT 2.3 due to improvements in the underlying polynomial and matrix arithmetic.

It’s tricky to compare timings, because the algorithms are randomized and input-sensitive. But typically, FLINT now appears to be faster than NTL for modular factorizations. Just as one example, taking the length-1000 polynomial whose coefficients are the successive prime numbers, and factoring it mod $p = 5$:

- NTL in Sage takes 3.6 seconds

- FLINT 1.5 in Sage (which uses Berlekamp) takes 13 seconds

- FLINT 2.3 using Berlekamp takes 0.65 seconds

- FLINT 2.3 using Cantor-Zassenhaus takes 0.94 seconds

We’re still some way behind Magma. On this particular example, FLINT 2.3 is actually breaking even (Magma takes 0.55 seconds, timed on a faster system), but Magma gets better with larger polynomials. However, the speed of factorization can be expected to improve further in a future version of FLINT. At the moment, our Cantor-Zassenhaus code does not use improvements such as sliding window exponentiation and Newton division with precomputation. More importantly still, the FLINT CZ implementation is the naive version and not the asymptotically-improved algorithm of von zur Gathen, Kaltofen and Shoup (which takes advantage of fast modular composition, mentioned in the section on power series arithmetic above). The good news is that Lina Kulakova currently is working on factorization over $\mathbb{Z}/p\mathbb{Z}$ for arbitrary-precision $p$ as her Google Summer of Code project, and as part of this she is also implementing the GKS algorithm. She is making great progress, so we will soon see the results!

At Sage Days 35, Andy Novocin and Sebastian also implemented restartable Hensel lifting and the naive Zassenhaus algorithm (exponential in the number of modular factors in the worst case), which allows factoring polynomials in $\mathbb{Z}[x]$ and $\mathbb{Q}[x]$. This should serve for most practical purposes, but in a future version, we also hope to include the subexponential Novocin-van Hoeij algorithm, which is currently implemented in FLINT 1.6 (this will require LLL, either porting the implementation in FLINT 1.6 or writing a better one from scratch). I have not done any benchmarking of the polynomial factoriation over $\mathbb{Z}$ yet.

Faster linear algebra mod $p$

All linear algebra operations modulo a word-size prime (the nmod_mat module) now use block recursive LU decomposition to reduce the calculations to matrix multiplication, instead of using naive Gaussian elimination. This affects solving, determinant, rank, row echelon form, and nullspace. Improved linear algebra mod $p$ should eventually result in a speedup for multimodular algorithms over $\mathbb{Z}$, $\mathbb{Q}$, $\mathbb{Z}[x]$, etc. (right now solving $Ax=B$ over $\mathbb{Z}$ and $\mathbb{Q}$, and, for all matrix types, computing determinants, benefits).

Block recursive LU decomposition is much faster than the usual Gaussian elimination for three reasons:

- Multiplication uses the Strassen-Winograd algorithm for large matrices so the complexity is $O(n^{2.81})$ instead of $O(n^3)$.

- Block decomposition gives much better memory locality than the usual row-by-row Gaussian elimination, substantially improving performance for large matrices.

- The order of operations when doing matrix multiplication allows some extra low-level optimizations. In particular, most modular reductions can be avoided.

Also worth pointing out is that matrix multiplication in FLINT 2.3 has been improved for very small moduli by packing several entries into each word. The nmod_mat module is still far from ideal for small moduli (it is most efficient for moduli just slightly smaller than a full 64-bit word), but this was a simple change that does yield a nice speedup in practice.

At the moment, matrix multiplication in FLINT is based entirely on integer arithmetic (with bits of inline assembly code, chiefly for x86-64). This is a factor six or so slower than using BLAS multiplication (as Sage does as of a recent version), assuming one chooses moduli small enough for the results to fit in hardware doubles. This means that FLINT lags behind by roughly a factor two or three for multimodular computation (since FLINT goes up to 64 bits, it has the advantage that it can get nearly three times the work done with a single modulus) when the matrices get large. To fix this, we plan to add BLAS support, perhaps optionally, to a future version of FLINT.

A timing example: computing the determinant of a 1000 x 1000 matrix modulo a 60-bit prime takes 0.8 seconds in FLINT 2.3, compared to 4.2 seconds in FLINT 2.2. For reference, a determinant modulo a 20-bit prime in Sage 4.8 takes 0.2 seconds.

Polynomial and integer matrices

The fmpz_mat module has been cleaned up a bit, and various functions have been made faster. The most notable improvement is in determinant calculation: instead of directly computing the determinant modulo several small primes, we first solve $Ax = b$ for a random $b$ to find a random divisor $d$ of the determinant. Then, instead of computing $\det(A)$, we compute $\det(A)/d$ which (with high probability) is very small. I’m not sure who first invented this algorithm; I borrowed the idea from Sage. Over $\mathbb{Z}$ and $\mathbb{Q}$, FLINT now computes determinants faster than Sage at least for generic matrices of dimension up to several hundred (it might be a bit slower asymptotically).

Matrices over $\mathbb{Z}[x]$ now support inverse, rank, reduced row echelon form, nullspace, and some more functions for products and exponentiation. A module nmod_poly_mat has also been added for matrices over $\mathbb{Z}/n\mathbb{Z}[x]$ for word-size $n$, with similar functionality.

Multipoint evaluation and interpolation

A useful new feature in the nmod_poly module is multipoint evaluation and interpolation — the ability to evaluate a length-$n$ polynomial on a grid of $n$ points, or recovering the polynomial from its $n$ values on the grid — in quasi-linear time $O(n \log^3 n)$.

One of the applications is to speed up linear algebra: for appropriate inputs, determinants and matrix products in the new nmod_poly_mat module are computed by evaluating the input polynomials on several points, computing the result at each point (using the fast linear algebra provided by the nmod_mat module), and then interpolating to form the output.

A fun application: as I wrote about previously, FLINT is now able to compute factorials mod $n$ in sublinear time!

A few more things

Finally, without going into detail (and still omitting several items):

- Unbalanced polynomial multiplication and division is now substantially faster and uses less memory.

- Bill has added code for computing all the square roots modulo an arbitrary word-size integer (until now FLINT only provided functions for computing the square roots modulo a prime).

- Bill added a quadratic sieve for quickly factoring two-limb integers (numbers up to 128 bits, actually slightly smaller for technical reasons, on 64-bit systems).

- Fast code has been added for computing Taylor shifts of polynomials, i.e. linear translations $P(x+c)$, very efficiently, particularly when $c = \pm 1$.

- FLINT now includes code for computing Euler’s constant $\gamma$ and the zeta constants $\zeta(n)$ with quasi-linear complexity (much faster than MPFR if you want millions of digits).

Some more benchmark results apart from those already mentioned in this post can be found on the FLINT 2 benchmarking page!

fredrikj.net |

Blog index |

RSS feed |

Follow me on Mastodon |

Become a sponsor